Hardly a day goes by without artificial intelligence grabbing the headlines. Be it in process automation, medical diagnosis, autonomous transportation, language processing or even gaming, applications of advanced machine learning algorithms are ubiquitous. Beyond highly publicised advances such as ChatGPT, machine learning has spread across all branches of science and continues to provide a rich source of innovative research work. Batteries are no exception – AI has become commonplace in fields such as materials discovery and characterisation, optimisation of experimental design and manufacturing, as well as state of charge (SOC) and state of health (SOH) estimation. Given the all-pervasive character of AI/ML in current state-of-the-art battery research, it is worthwhile to take stock and critically assess the added value of these methods vis-à-vis their more conventional counterparts. With all the hype, it can be difficult to see the wood for the trees – where does the value of these ‘black-box’ methods lie? Can their performance in real-world scenarios be expected to match that achieved in sandbox (lab) settings? Here we offer brief insight into the potential benefits of AI/ML-based methods in the field of SOH estimation, and highlight where they fall short, ultimately making the case that only through the fusion of data-driven and model-driven approaches can we provide robust solutions for real-world battery systems.

Benefits of AI/ML

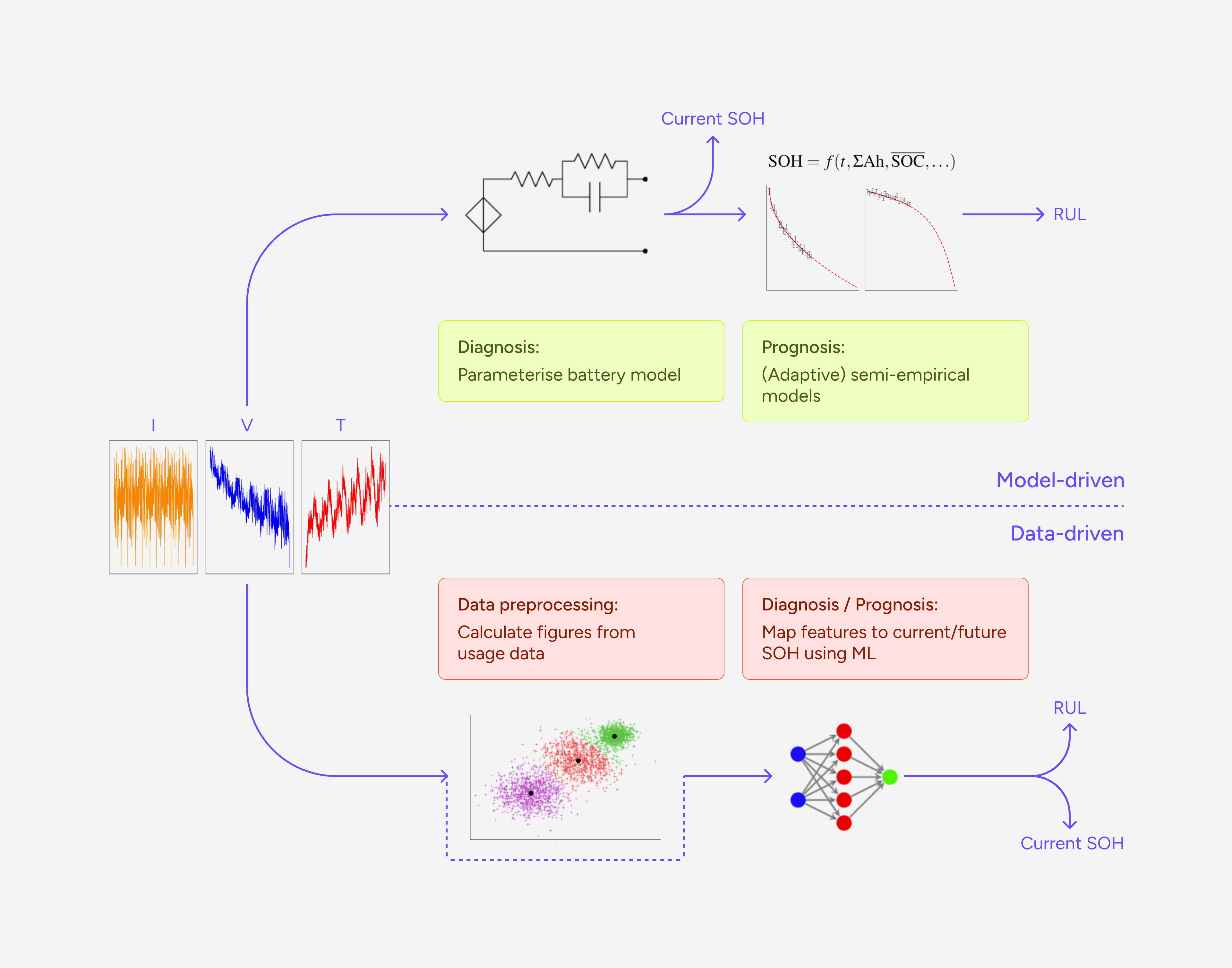

Machine learning methods can be broadly categorised into supervised and unsupervised learning algorithms. The former seek to construct an arbitrary (usually non-linear) mapping from a set of inputs to their corresponding outputs by minimising the error between predicted and observed outputs. The latter seek to learn patterns in the absence of labelled data. SOH estimation in battery systems is, in general, treated as a supervised learning problem, where the set of inputs consists of either raw current, voltage and temperature telemetry data or specific ‘features’ calculated from them. The output is then either the current or the future SOH of the battery, both of which are difficult to estimate accurately in real-world operating environments, where accurate reference performance tests are unavailable and usage patterns and operating conditions vary. The data-driven paradigm stands in contrast to the more conventional ‘model-driven’ approach, which parameterises an explicit model describing the input/output response of a battery; gradual changes in the model parameters then reflect the evolution of SOH. For the purpose of forecasting state of health, or remaining useful life, another model is required, which usually associates degradation in health with usage patterns (charging/discharging and storage behaviours) and environmental conditions (such as temperature).

Figure 1 – Two contrasting approaches to SOH estimation – conventional, ‘model-driven’ approaches have explicit models describing battery i/o responses as well as battery ageing. Purely data-driven or AI/ML methods forgo explicit models and employ supervised learning methods to learn mappings between inputs and outputs.

Aitio, A. (2023). Bayesian methods for battery state of health estimation [PhD thesis]. University of Oxford.

The main advantage of AI methods over their ‘model-driven’ counterparts is their flexibility – they make very few assumptions about the underlying functional forms that describe the input-output mappings. They can therefore express very complex relationships between, say, usage and battery degradation, in a manner that would be difficult to reproduce through empirical or semi-empirical models². In addition, ML models can be comparatively easy to parameterise with standard off-the-shelf methods. In contrast, accurate battery and ageing models, especially those based on physical first principles, can be time-consuming to simulate and very difficult to parameterise.
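To make the idea of a flexible, off-the-shelf mapping concrete, the following is a minimal sketch rather than a recommended estimator. It assumes two hypothetical input features (equivalent full cycles and mean temperature), generates synthetic SOH labels from an invented ageing law, and fits generic polynomial features with ordinary least squares. The feature names, the data-generating law and all constants are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "lab" data (hypothetical): usage and temperature per cell.
n = 200
efc = rng.uniform(0, 1000, n)        # equivalent full cycles
temp_c = rng.uniform(15, 45, n)      # mean cell temperature, deg C
# Assumed law used only to generate labels; not a validated ageing model.
soh = 1.0 - 0.004 * np.sqrt(efc) * np.exp(0.03 * (temp_c - 25.0))
soh += rng.normal(0.0, 0.002, n)     # noise on the reference tests


def design_matrix(efc, temp_c):
    """Generic polynomial features: no battery-specific functional form."""
    x1 = efc / 1000.0
    x2 = (temp_c - 30.0) / 15.0
    return np.column_stack([
        np.ones_like(x1), x1, x2, x1 * x2, x1**2, x2**2,
        x1**2 * x2, x1 * x2**2, x1**3, x2**3,
    ])


def fit(efc, temp_c, soh):
    """Off-the-shelf parameterisation: ordinary least squares."""
    weights, *_ = np.linalg.lstsq(design_matrix(efc, temp_c), soh, rcond=None)
    return weights


def predict(weights, efc, temp_c):
    efc = np.atleast_1d(efc).astype(float)
    temp_c = np.atleast_1d(temp_c).astype(float)
    return design_matrix(efc, temp_c) @ weights


weights = fit(efc, temp_c, soh)
train_rmse = float(np.sqrt(np.mean((predict(weights, efc, temp_c) - soh) ** 2)))
print(f"training RMSE: {train_rmse:.4f}")
print(f"predicted SOH at 500 EFC, 25 deg C: {predict(weights, 500, 25)[0]:.3f}")
```

Note that the fitted model never sees the square-root or exponential terms used to generate the labels; it approximates them from data alone, which is the flexibility described above.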

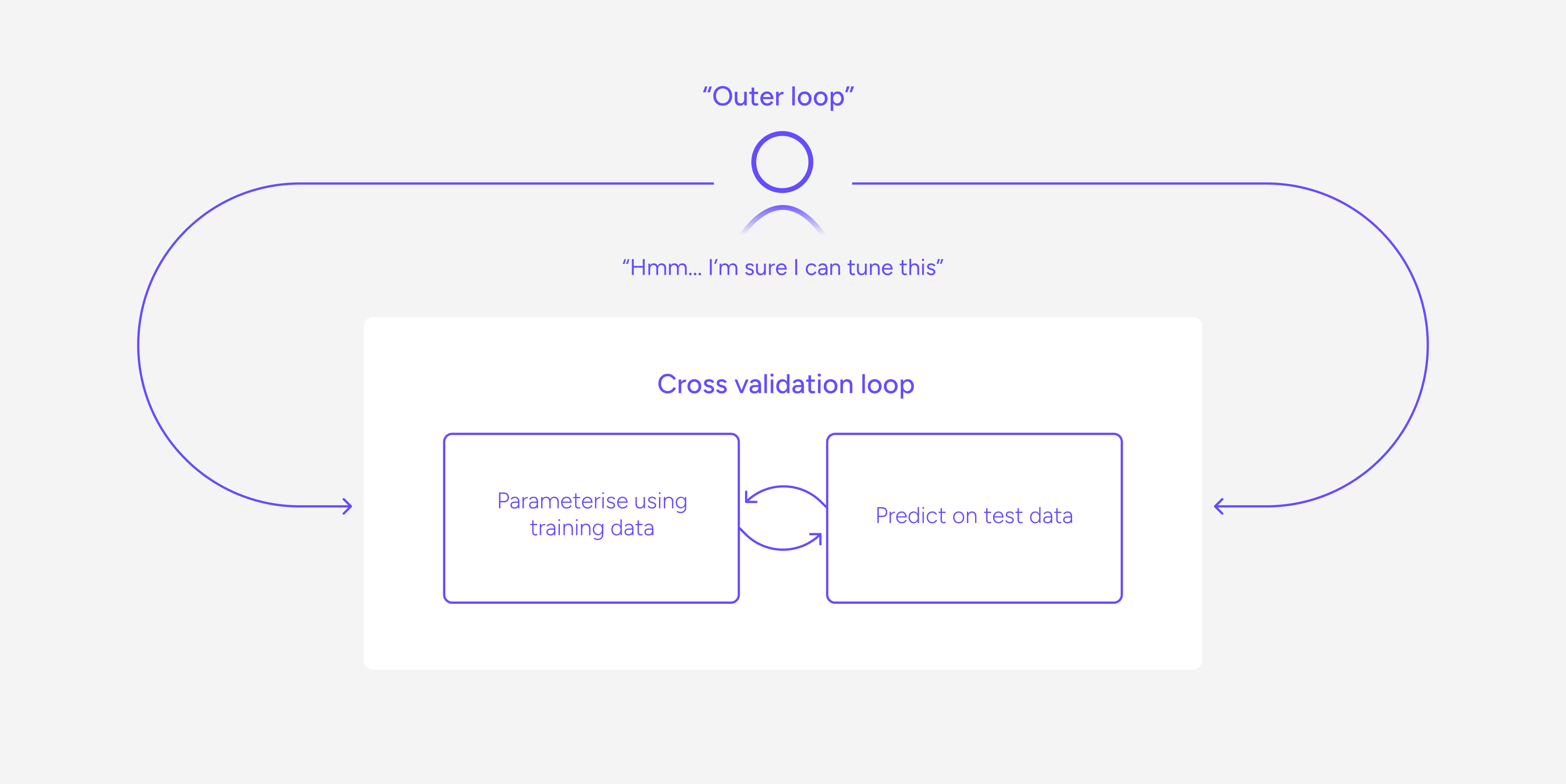

The price of flexibility

The major advantage of AI-based models comes with a significant drawback. The near-unlimited flexibility to map complicated input-output relationships, forgoing conventional models that rely on domain knowledge (or indeed any fixed functional form), means that one can always find a good fit to the training data. Given the arbitrariness of the solution, there is then no guarantee of predictive performance outside the dataset used for training. While many techniques exist to mitigate this overfitting to training data, usually within the commonly used cross-validation cycle, they require significant tuning or ‘steering’ effort on the part of the human modeller. In other words, human effort is essential in narrowing down the flexibility.
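The trade-off described above can be illustrated with a small sketch under stated assumptions: a synthetic cell whose SOH falls linearly with cycle count, twelve noisy reference tests, and polynomial models of increasing degree standing in for models of increasing flexibility. The data, noise level and choice of degrees are hypothetical. Leave-one-out cross-validation is used here as one simple instance of the cross-validation cycle.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)

# Synthetic ageing data (hypothetical): SOH falls linearly with cycle count.
cycles = np.arange(0.0, 600.0, 50.0)   # 12 reference tests at 0, 50, ..., 550
soh = 1.0 - 2e-4 * cycles + rng.normal(0.0, 0.003, cycles.size)


def rmse(pred, target):
    return float(np.sqrt(np.mean((pred - target) ** 2)))


def train_rmse(degree):
    """Error on the same points that were used for fitting."""
    model = Polynomial.fit(cycles, soh, degree)
    return rmse(model(cycles), soh)


def loo_cv_rmse(degree):
    """Leave-one-out cross-validation: predict each point from all the others."""
    errors = []
    for i in range(cycles.size):
        keep = np.arange(cycles.size) != i
        model = Polynomial.fit(cycles[keep], soh[keep], degree)
        errors.append(model(cycles[i]) - soh[i])
    return float(np.sqrt(np.mean(np.square(errors))))


for degree in (1, 3, 9):
    print(f"degree {degree}: train RMSE {train_rmse(degree):.4f}, "
          f"LOO-CV RMSE {loo_cv_rmse(degree):.4f}")
```

The training error can only decrease as the degree grows, because each higher-degree model contains the lower-degree ones. The cross-validated error is the quantity that exposes the overfit, and choosing the degree on that basis is exactly the kind of human ‘steering’ the text refers to.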

The issue with data

Supervised learning algorithms require labelled input-output data to train, and predictive performance in situ is largely determined by the coverage of the training set. For SOH estimation in lab conditions, such data is relatively easy to obtain by conducting experimental ageing campaigns with periodic reference performance tests, such as constant-current discharge tests for capacity, pulse tests to measure DC resistance, and EIS to measure complex impedance. However, in real-world operating conditions these are not usually available. The lack of validation data is particularly acute when trying to predict extreme or rare events, such as thermal runaway. To mitigate the lack of labelled field data, it is in principle possible to collect training data in the lab; in practice, however, this is rarely sufficient. For example, in remaining useful life (RUL) forecasting, accelerated ageing campaigns using large numbers of cells have been used to identify features in voltage data during early life that determine the probability of early failure³. However, it is doubtful whether such experimental work carries over to the real world. To shorten the duration of ageing campaigns, the chosen cycling profiles are clearly not representative of automotive or standard stationary use cases. In reality, battery systems experience broad ranges and complicated combinations of temperatures, C-rates, storage SOCs etc. – it is not feasible to collect experimental data for all possible combinations of stress factors encountered in situ. Even in the purely diagnostic case, access to features related to current state of health (devised in lab conditions) is by no means guaranteed in the real world, where cell current and voltage measurements may be sampled at lower frequencies and taken across parallel configurations, the net effect of which is to blur out many features of interest.

Expressive, yes, but insightful?

While AI methods are by design highly expressive, their black-box nature inevitably reduces the insight derived from them. For example, sensitivity analysis, which gauges the effect of changes in inputs on predictions, only applies locally in the nonlinear case. In other words, the sensitivity to a single input depends on the specific combination of all the others. This makes it challenging to gain intuition on model behaviour. This type of insight is crucial in the eventual decision process faced by stakeholders, who seek to understand which factors most significantly affect the estimates of the current (and future) health of their assets – a monolithic, one-dimensional health estimate is insufficient when it cannot be decomposed into its components.

Shades of grey

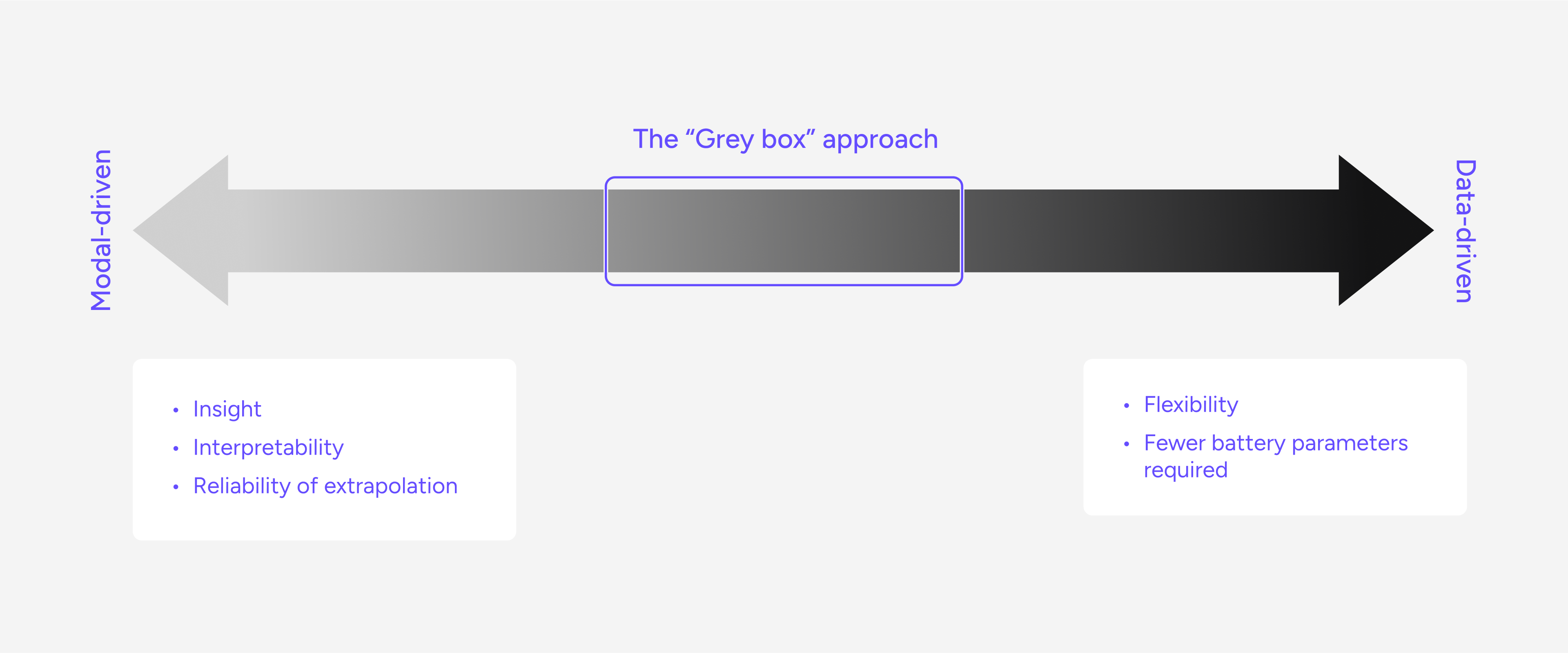

A purely data-driven approach to SOH estimation comes with several pitfalls. However, its inherent flexibility can be hugely valuable. A well-targeted application of AI, in our view, is advantageous only when combined with battery domain knowledge. But machine learning should not be the starting point. Instead, we prefer a ‘bottom-up’ approach, relying on a comprehensive first-principles understanding of battery systems, ranging from cell electrochemistry to pack integration. This allows us to prioritise well-targeted insights that incorporate the state of the art in battery research. AI/ML can then be applied to describe those relationships we know are not adequately modelled by first principles.
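One way to picture this combination is a residual (or ‘grey-box’) structure, sketched below under stated assumptions. An explicit baseline of the form SOH = 1 − k·√EFC, a commonly used empirical fade law assumed here purely for illustration, is fitted first. A generic ridge regression is then trained only on what the baseline leaves unexplained. The synthetic data, the feature choice and the regularisation strength are all hypothetical, and this is not a description of any particular production method.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic data (hypothetical): usage, temperature and SOH labels.
n = 300
efc = rng.uniform(0, 1000, n)      # equivalent full cycles
temp_c = rng.uniform(15, 45, n)    # mean cell temperature, deg C
soh = 1.0 - 0.004 * np.sqrt(efc) * (1.0 + 0.02 * (temp_c - 25.0))
soh += rng.normal(0.0, 0.002, n)


def fit_baseline(efc, soh):
    """Model-driven part: SOH = 1 - k * sqrt(EFC), closed-form least squares for k."""
    basis = np.sqrt(efc)
    return float(basis @ (1.0 - soh) / (basis @ basis))


def baseline(k, efc):
    return 1.0 - k * np.sqrt(efc)


def residual_features(efc, temp_c):
    x1 = efc / 1000.0
    x2 = (temp_c - 30.0) / 15.0
    return np.column_stack([np.ones_like(x1), x1, x2, x1 * x2, x2**2])


def fit_residual(efc, temp_c, residual, ridge=1e-3):
    """Data-driven part: ridge regression on what the baseline cannot explain."""
    phi = residual_features(efc, temp_c)
    gram = phi.T @ phi + ridge * np.eye(phi.shape[1])
    return np.linalg.solve(gram, phi.T @ residual)


def rmse(pred, target):
    return float(np.sqrt(np.mean((pred - target) ** 2)))


k = fit_baseline(efc, soh)
w = fit_residual(efc, temp_c, soh - baseline(k, efc))

baseline_pred = baseline(k, efc)
hybrid_pred = baseline_pred + residual_features(efc, temp_c) @ w

print(f"fitted k: {k:.5f}")
print(f"baseline RMSE: {rmse(baseline_pred, soh):.4f}")
print(f"hybrid RMSE:   {rmse(hybrid_pred, soh):.4f}")
```

The fitted parameter k keeps a physical reading (fade rate per root cycle), while the learned correction captures the temperature dependence the baseline omits. Because the data-driven part only models the residual, its influence stays bounded and inspectable.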

So… it’s not all bad news

AI is transforming the battery SOH estimation landscape. But in doing so, researchers and industry alike face the risk of putting the cart before the horse – the role of AI should not necessarily be to re-invent existing physics-based or empirical models, but rather to augment them where appropriate. In this sense, there are enormous gains to be had in improving SOH diagnostic and prognostic accuracy, while retaining the intuitive understanding of battery behaviour, which will ultimately improve the decision-making process for those seeking to extract the most from their battery storage assets.

1 Zhang, D., Mishra, S., Brynjolfsson, E., Etchemendy, J., Ganguli, D., Grosz, B., Lyons, T., Manyika, J., Niebles, J. C., Sellitto, M., Shoham, Y., Clark, J., Perrault, R. (2021). 2021 AI Index Report.

2 Schimpe, M., von Kuepach, M. E., Naumann, M., Hesse, H. C., Smith, K., Jossen, A. (2018). Comprehensive Modeling of Temperature-Dependent Degradation Mechanisms in Lithium Iron Phosphate Batteries. Journal of The Electrochemical Society (2), A181–A193.

3 Severson, K. A., Attia, P. M., Jin, N., Perkins, N., Jiang, B., Yang, Z., Chen, M. H., Aykol, M., Herring, P. K., Fraggedakis, D., Bazant, M. Z., Harris, S. J., Chueh, W. C., Braatz, R. D. (2019). Data-driven prediction of battery cycle life before capacity degradation. Nature Energy 4 (5), 383–391.

Don’t forget to catch Elysia Battery Intelligence from Fortescue Zero as the Platinum Sponsors at MOVE America 2024 at the Austin Convention Center, 24-25 September, Austin, TX for more exclusive insights on the future of batteries.